At any given moment all over the world, Twitter is filled with rich and vibrant conversations between people from a wide breadth of cultures. Twitter Aurora explores how these communities evolve and interact.

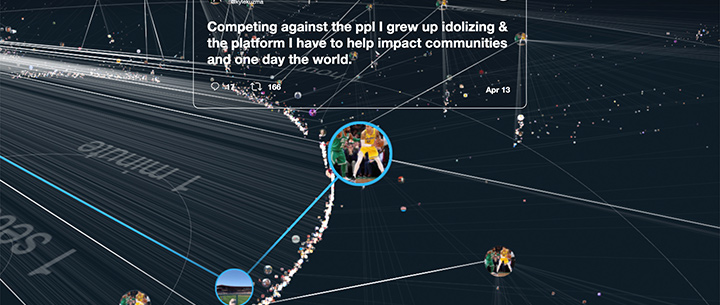

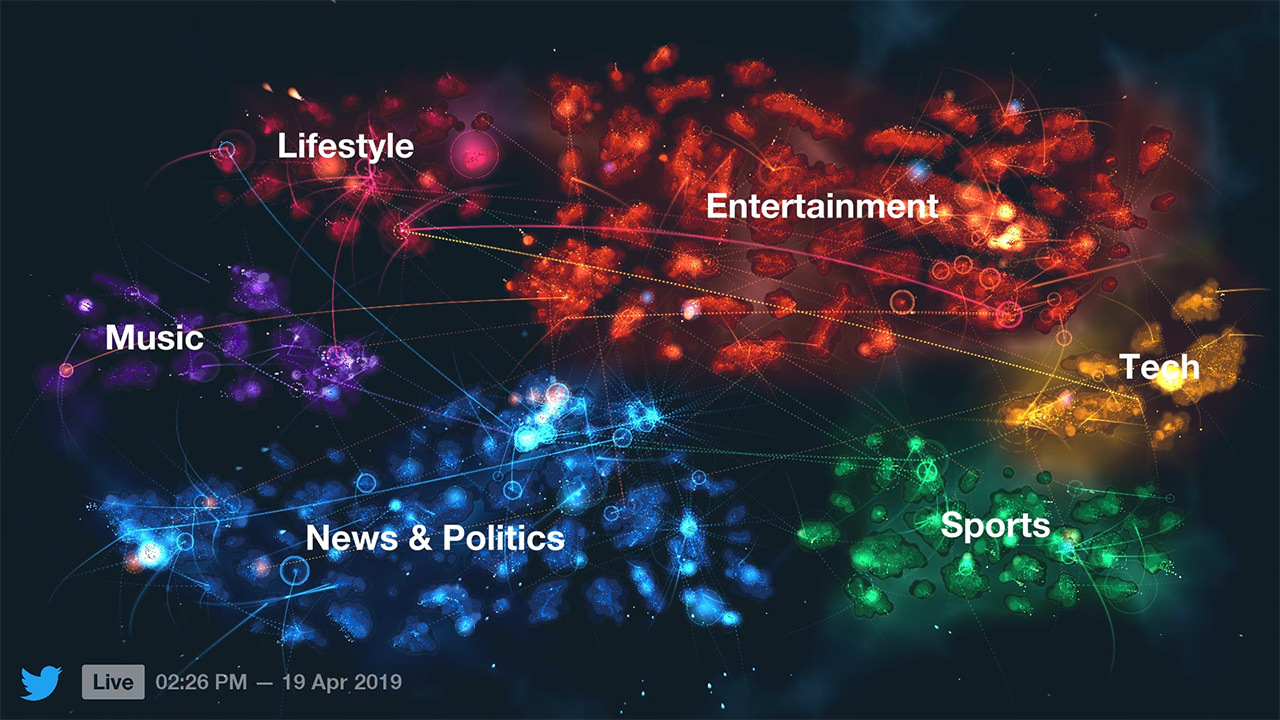

At its core, Twitter Aurora is a live map of Twitter. It visualizes conversations on the platform and provides new ways to discover trends among diverse interest communities. It helps people understand Twitter from a new viewpoint, beyond the timeline that we're used to. In that way, it helps Twitter tell a human story from a very complex data system.

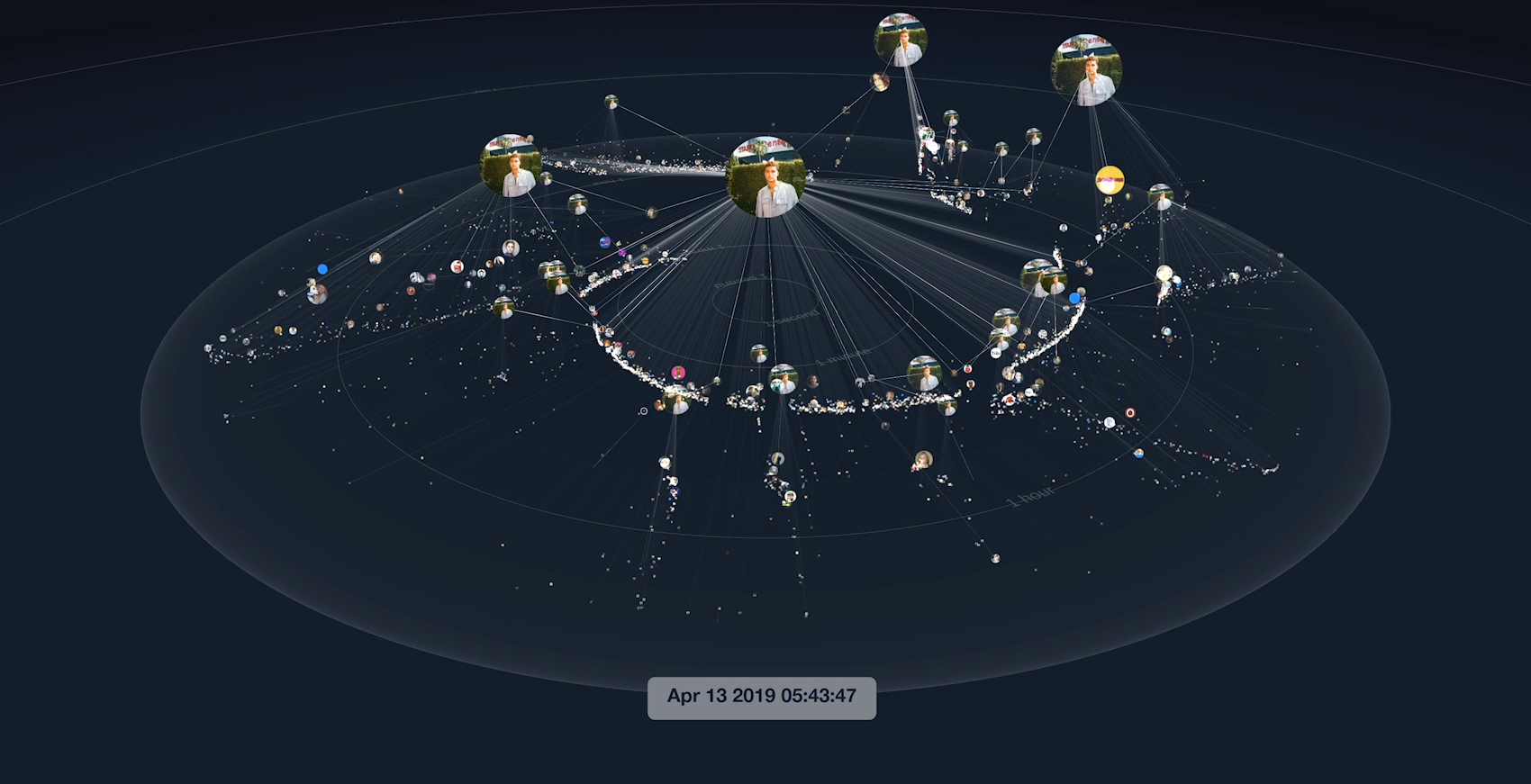

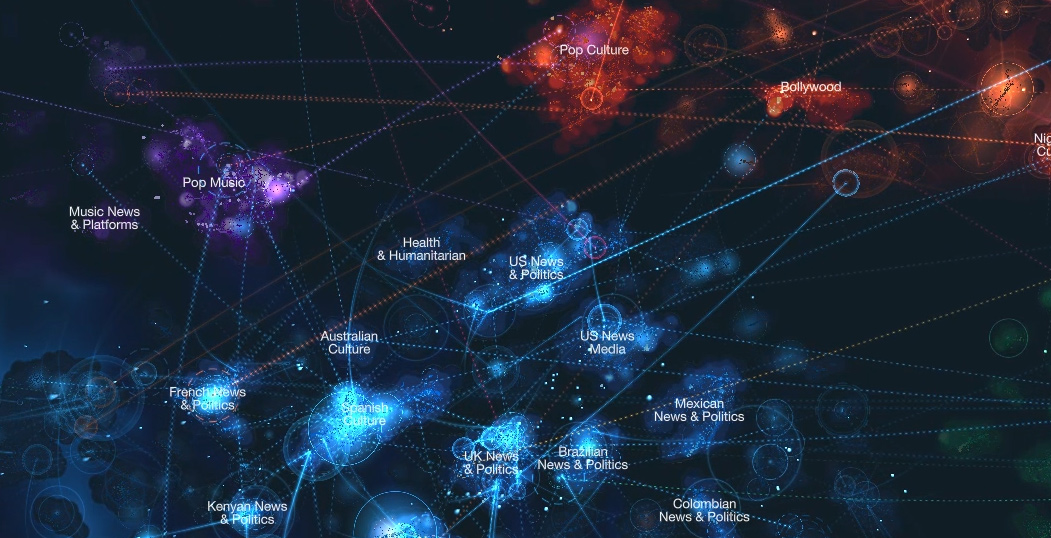

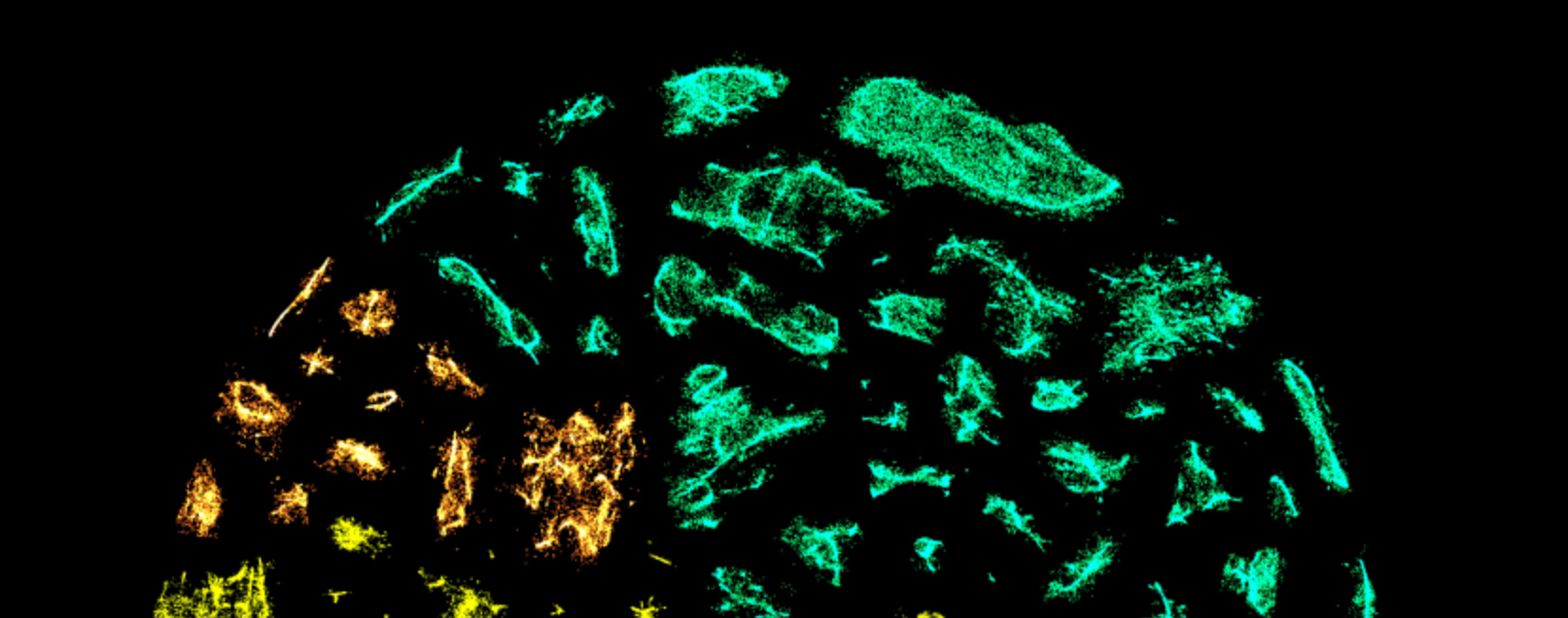

The Interest mode is the heart of the Aurora experience, where users are grouped into communities based on the followers they have in common. By arranging our data in community clusters, we can identify pockets of culture on the platform. Some of them are expected and widely accessible, like the Popular Culture cluster or the NBA fans community. Others are largely unknown to the mainstream, like the Indonesian LARPing community.
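To make the idea of grouping by shared followers concrete, here is a minimal sketch. It is not Aurora's actual pipeline: the Jaccard similarity measure, the `threshold` value, and the function names are all assumptions chosen for illustration. Accounts whose follower sets overlap enough are linked, and linked accounts end up in the same community.

```python
from itertools import combinations


def jaccard(a: set, b: set) -> float:
    """Share of followers two accounts have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def cluster_by_shared_followers(followers: dict, threshold: float = 0.3) -> list:
    """Group accounts whose follower overlap meets a (hypothetical) threshold.

    `followers` maps an account name to the set of ids that follow it.
    Accounts are merged with a simple union-find structure.
    """
    parent = {user: user for user in followers}

    def find(user):
        # Walk up to the root of the group, shortening the path as we go.
        while parent[user] != user:
            parent[user] = parent[parent[user]]
            user = parent[user]
        return user

    # Link every pair of accounts that share enough followers.
    for a, b in combinations(followers, 2):
        if jaccard(followers[a], followers[b]) >= threshold:
            parent[find(a)] = find(b)

    # Collect accounts by the root of their group.
    groups = {}
    for user in followers:
        groups.setdefault(find(user), set()).add(user)
    return list(groups.values())
```

Comparing every pair of accounts only works for small samples; at the scale of a real platform, an approximate or graph-based community detection method would be needed instead.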

At a high level, clusters are grouped together in continents that represent a number of 'passions' derived from Twitter branding: News & Politics, Entertainment, Music, Sports, and Technology. Within clusters, users appear close to those who share followers with them, and their altitude is mapped to the size of their following, which creates a unique topology for each community. Aurora lets users navigate through this landscape of Twitter culture and explore how different communities interact over time.
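As an illustration of mapping altitude to following, the sketch below converts a follower count into a height. The logarithmic scale, the `max_height` parameter, and the function name are assumptions made for this example, not a description of how Aurora computes its terrain. A log scale is used here because follower counts span several orders of magnitude, so a linear mapping would flatten almost everyone to the ground.

```python
import math


def altitude(follower_count: int, max_followers: int, max_height: float = 1.0) -> float:
    """Map a follower count to a height between 0 and max_height (log scale)."""
    if max_followers <= 0:
        return 0.0
    # Clamp to the valid range so outliers cannot exceed the ceiling.
    clamped = min(max(follower_count, 0), max_followers)
    return max_height * math.log1p(clamped) / math.log1p(max_followers)
```

With this mapping, an account with no followers sits at ground level, and the most-followed account in a cluster defines its peak.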

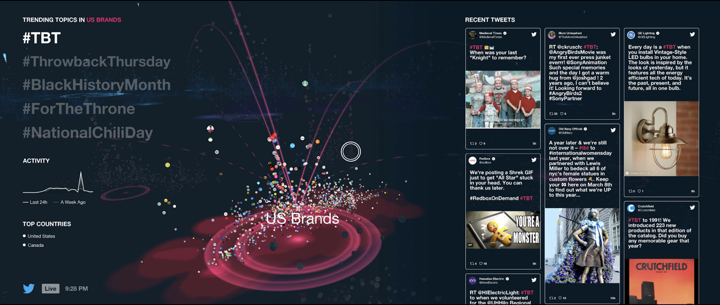

In Geo mode, we see a representation of our world, with its countries and trending topics. Tweets shoot across the screen as topics are discussed in real time. When users hover over a trending topic, the countries that are having conversations about that trend or hashtag light up.

Twitter Aurora was exhibited at Twitter's headquarters in San Francisco, where people could experience it in person. The experience was immersive and interactive: people navigated through a live universe of interests and conversations from around the world, in real time.

The project also ran on dozens of screens in offices around the world, where Twitter employees could see a passive version of the software summarizing current trends on the platform. It was also installed at many high-profile marketing events, including CES in 2019.

Twitter Aurora was initially called Twitter Manifold, and was spearheaded by the Office for Creative Research, commissioned by Twitter's Amanda McCroskery. The initial team included Jer Thorp, Genevieve Hoffman, Chris Anderson, Marcus Pingel, Ryan Bartlett, A'yen Tran, and Will Lindmeier.

I joined Twitter #Studio in 2017, after the OCR had handed over the first version of the project. I worked as a data scientist in support of existing initiatives, and progressively took on more responsibilities in developing new visualization tools.